Over the past few years, the online gambling business, like other businesses such as Spotify, Netflix and Amazon, has been transformed by artificial intelligence (AI), and it will likely continue to be transformed in the years to come as AI use grows exponentially. In these industries, AI is used to learn users' habits, patterns, behaviour (sometimes even emotions) and interactions, and thus to maximise the reach of the services and products these companies offer. In online gambling, however, AI can also introduce new forms of gambling or social betting, in which AI could automatically create odds, set pay-outs and target atypical user segments for this form of betting or gambling.

AI is also a formidable ally in optimising customer service, preventing fraud and automating parts of regulatory compliance, including anti-money laundering (AML) and counter-terrorist financing (CFT) checks. It can be used for onboarding, age verification, geolocation and similar controls. AI is also used to monitor player behaviour so that operators can identify problem gamblers and intervene before they elect to self-exclude. The self-exclusion process itself can also be handled through AI. In addition, AI helps operators use customer data more effectively, and thus build better products and more personalised experiences for players. Its full potential is still untapped, and we anticipate wider use of AI across the gambling sector over the coming years.

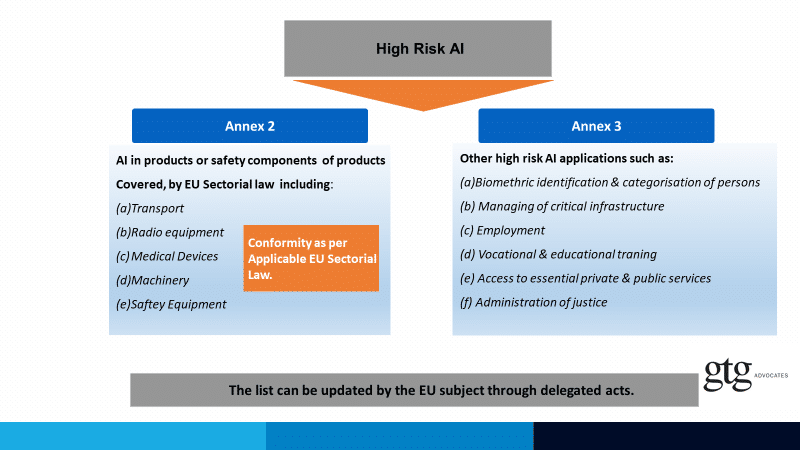

Gambling operators therefore need to follow developments on the new draft EU regulation closely, as certain AI systems they deploy or plan to deploy could fall within the category of high-risk AI systems listed in Annex III of the draft regulation:

In that case, the gambling operator would need to comply with the applicable obligations, potentially including technical requirements, transparency measures, risk management, human oversight, and certification and registration.

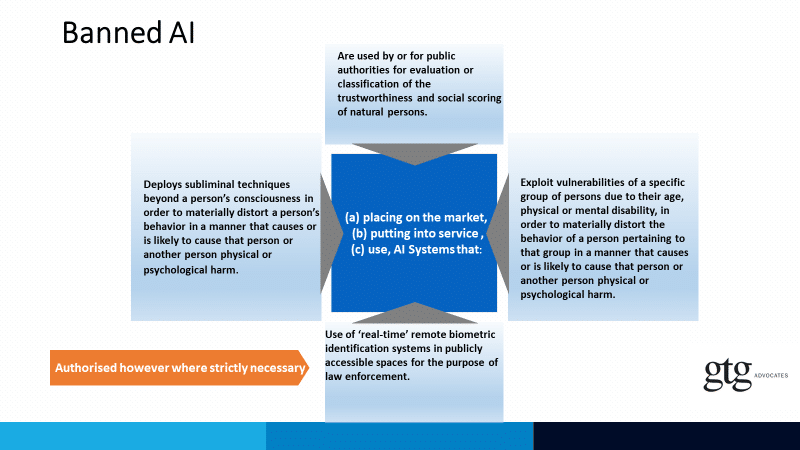

Alternatively, or in addition, such systems could be caught by the prohibitions in Article 5, as follows:

In addition to Article 5, the draft regulation states in Recital 16 that:

The placing on the market, putting into service or use of certain AI systems intended to distort human behaviour, whereby physical or psychological harms are likely to occur, should be forbidden. Such AI systems deploy subliminal components individuals cannot perceive or exploit vulnerabilities of children and people due to their age, physical or mental incapacities. They do so with the intention to materially distort the behaviour of a person and in a manner that causes or is likely to cause harm to that or another person. The intention may not be presumed if the distortion of human behaviour results from factors external to the AI system which are outside of the control of the provider or the user. Research for legitimate purposes in relation to such AI systems should not be stifled by the prohibition, if such research does not amount to use of the AI system in human-machine relations that exposes natural persons to harm and such research is carried out in accordance with recognised ethical standards for scientific research.

For the time being, identifying exactly what would be banned is highly subjective, and there are no guidelines or additional criteria to support a proper and coherent interpretation. We hope that, before the draft regulation is rolled out in the years to come, these criteria are crystallised and that a scientifically backed risk model framework and matrix is adopted to guide organisations in assessing risk, the probability of that risk and the severity of harm, and thus in determining whether an activity would be caught by the specific prohibitions mentioned above. This is crucial: otherwise, besides the lack of transparency, organisations would be left to define these subjective factors on their own, with the threat of massive penalties hanging over their heads.
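The draft regulation contains no such framework. Purely to illustrate the kind of likelihood-and-severity matrix hoped for in the paragraph above, here is a hypothetical Python sketch. The five-point scales, the choice to multiply likelihood by severity, and the band thresholds are all assumptions made for illustration, not criteria taken from the draft regulation or from any official guidance.

```python
from enum import IntEnum


# Hypothetical five-point scales; the draft regulation defines none of these.
class Likelihood(IntEnum):
    RARE = 1
    UNLIKELY = 2
    POSSIBLE = 3
    LIKELY = 4
    ALMOST_CERTAIN = 5


class Severity(IntEnum):
    NEGLIGIBLE = 1
    MINOR = 2
    MODERATE = 3
    MAJOR = 4
    SEVERE = 5


def risk_score(likelihood: Likelihood, severity: Severity) -> int:
    """Classic matrix score: probability of harm multiplied by its severity (range 1 to 25)."""
    return int(likelihood) * int(severity)


def risk_band(score: int) -> str:
    """Map a score to a band; the thresholds are illustrative assumptions only."""
    if score >= 15:
        return "high"
    if score >= 8:
        return "medium"
    return "low"


if __name__ == "__main__":
    # A hypothetical AI feature judged likely to cause major harm.
    score = risk_score(Likelihood.LIKELY, Severity.MAJOR)
    print(score, risk_band(score))  # 16 high
```

In a sketch like this, a "high" band would simply flag a feature for closer legal review; where the line falls between a high-risk system and a prohibited practice is precisely the question the draft currently leaves open.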

The draft regulation, like the GDPR regime, provides for substantial fines for non-compliance, as follows:

- Developing and placing a prohibited (blacklisted) AI system on the market or putting it into service (up to EUR 30 million or 6% of the total worldwide annual turnover of the preceding financial year, whichever is higher).

- Failing to fulfil the obligations of cooperation with the national competent authorities, including their investigations (up to EUR 20 million or 4% of the total worldwide annual turnover of the preceding financial year, whichever is higher).

- Supplying incorrect, incomplete or false information to notified bodies (up to EUR 10 million or 2% of the total worldwide annual turnover of the preceding financial year, whichever is higher).
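To make the "whichever is higher" rule concrete, here is a minimal Python sketch that computes the maximum fine for each tier using the figures listed above for the draft regulation. The tier labels and the function name `max_fine_eur` are hypothetical shorthand chosen for illustration; the sketch assumes turnover is given in whole euros and computes only the statutory ceiling.

```python
# Fine tiers as listed above for the draft regulation:
# (fixed cap in EUR, percentage of total worldwide annual turnover).
# The tier labels are hypothetical shorthand for the three bullets.
FINE_TIERS = {
    "prohibited_ai_system": (30_000_000, 6),
    "failure_to_cooperate": (20_000_000, 4),
    "incorrect_information": (10_000_000, 2),
}


def max_fine_eur(tier: str, worldwide_turnover_eur: int) -> int:
    """Return the ceiling: the fixed cap or the turnover share, whichever is higher."""
    fixed_cap, percent = FINE_TIERS[tier]
    # Integer arithmetic avoids floating-point rounding on large amounts.
    turnover_cap = worldwide_turnover_eur * percent // 100
    return max(fixed_cap, turnover_cap)


if __name__ == "__main__":
    # EUR 1 billion turnover: 6% (EUR 60m) exceeds the EUR 30m fixed cap.
    print(max_fine_eur("prohibited_ai_system", 1_000_000_000))  # 60000000
    # EUR 200 million turnover: 6% is only EUR 12m, so the EUR 30m cap applies.
    print(max_fine_eur("prohibited_ai_system", 200_000_000))  # 30000000
```

These figures are upper limits rather than the fines that would actually be imposed; the amount in a given case would depend on the circumstances of the infringement.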

Operators should therefore also monitor specific measures implemented by Member States such as Malta, whose national law already caters for certain elements of the draft regulation and which could thus serve as an ideal test bed as the proposed regulation is phased in. One such measure in the draft regulation is the AI regulatory sandbox proposed in Article 53(1):

AI regulatory sandboxes established by one or more Member States competent authorities or the European Data Protection Supervisor shall provide a controlled environment that facilitates the development, testing and validation of innovative AI systems for a limited time before their placement on the market or putting into service pursuant to a specific plan. This shall take place under the direct supervision and guidance by the competent authorities with a view to ensuring compliance with the requirements of this Regulation and, where relevant, other Union and Member States legislation supervised within the sandbox.

This is similar to the Maltese technology sandbox model, which will soon be fully operational through the Malta Digital Innovation Authority.

Article by Dr Ian Gauci.

Disclaimer: This article is not intended to impart legal advice and readers are asked to seek verification of statements made before acting on them.